When a drug is highly variable, meaning its blood levels swing widely from one dose to the next even in the same person, standard bioequivalence (BE) studies often fail. You can’t just give one group the generic and another the brand, wait for blood levels to peak, and call it a day. That approach works fine for stable drugs like metformin or lisinopril. But for drugs like warfarin, levothyroxine, or clopidogrel, where small changes in concentration can mean the difference between a clot and a bleed, you need something smarter. That’s where replicate study designs come in.

Why Standard Bioequivalence Studies Fall Short

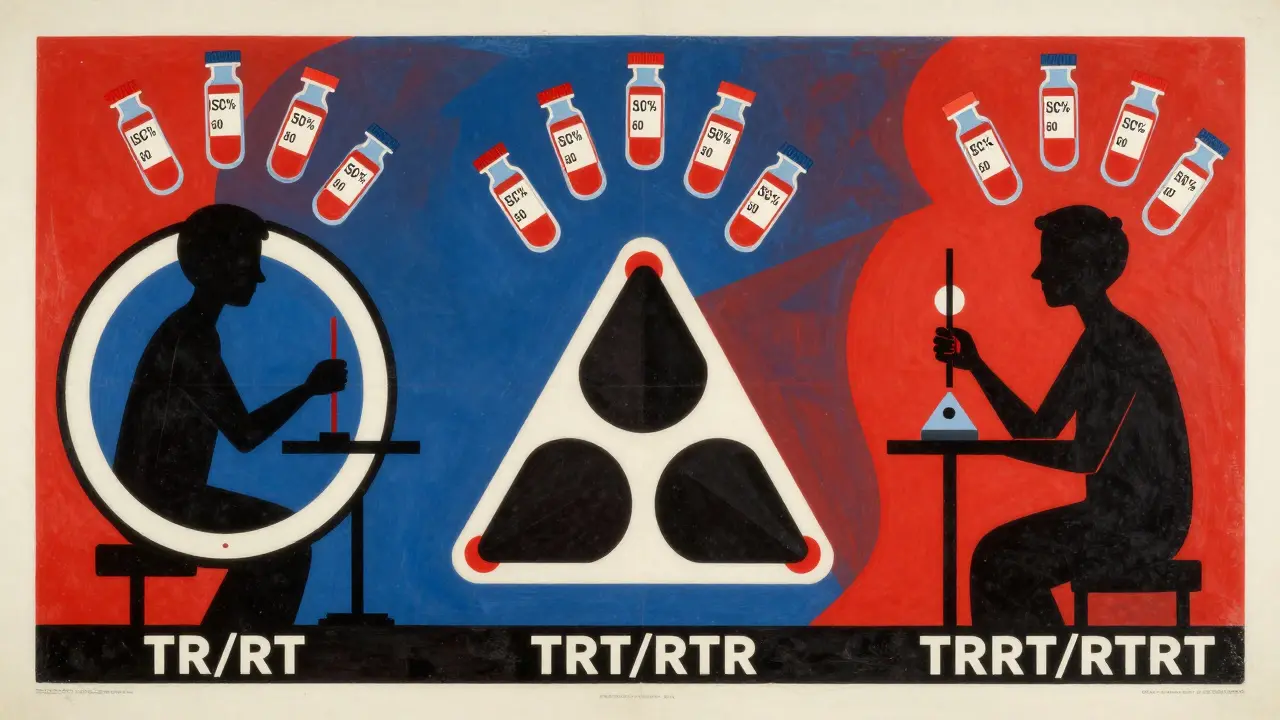

The classic two-period, two-sequence crossover (TR, RT) has been the gold standard for decades. It’s simple: half the subjects get the test drug first, then the reference after a washout; the other half do the reverse. It works well when the drug’s variability is low: under 30% within-subject coefficient of variation (ISCV). But when ISCV hits 40%, 50%, or even 60%, the sample size needed to detect equivalence becomes unrealistic. We’re talking 100+ subjects. That’s expensive. It’s slow. And ethically, it’s asking too much of volunteers. For example, a 2020 analysis showed that for a drug with 50% ISCV and a 10% formulation difference, a standard design needed 108 subjects to hit 80% power. A replicate design? Just 28. That’s not a small improvement. It’s the difference between a study that’s doable and one that’s impossible.

What Are Replicate Study Designs?

Replicate designs aren’t just repeating the same thing twice. They’re structured to measure variability within each subject across multiple doses. The goal is to separate how much the drug varies from person to person (between-subject variability) versus how much it varies in the same person over time (within-subject variability). Only then can regulators scale the bioequivalence limits, something called reference-scaled average bioequivalence (RSABE). There are three main types:

- Full replicate: Subjects get both test and reference drugs more than once. Common patterns: TRRT, RTRT (four periods), or TRT, RTR (three periods). This lets you estimate variability for both the test and reference products.

- Partial replicate: Only the reference is repeated. Patterns: TRR, RTR, RRT (three periods). You can’t measure test variability, but you can still scale based on the reference’s variability. The FDA accepts this for many highly variable drugs (HVDs).

- Non-replicate: The old TR/RT design. No repetition. Not allowed for HVDs anymore under FDA or EMA rules.
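The three categories above can be told apart mechanically from the sequence strings. The following is a minimal Python sketch, not part of any regulatory tool; the function name `classify_design` and the `"other"` fallback are illustrative assumptions.

```python
def classify_design(sequences):
    """Classify a crossover design from its treatment sequences.

    Each sequence is a string with one letter per period:
    'T' for the test product, 'R' for the reference product.
    """
    # A product counts as replicated if any sequence administers it more than once.
    ref_repeated = any(seq.count("R") > 1 for seq in sequences)
    test_repeated = any(seq.count("T") > 1 for seq in sequences)

    if ref_repeated and test_repeated:
        return "full replicate"
    if ref_repeated:
        return "partial replicate"
    if test_repeated:
        # Only the test is repeated: not one of the three types listed above.
        return "other"
    return "non-replicate"


if __name__ == "__main__":
    for design in (["TRRT", "RTRT"], ["TRT", "RTR"], ["TRR", "RTR", "RRT"], ["TR", "RT"]):
        print("/".join(design), "->", classify_design(design))
```

Note that a design is judged across all of its sequences: TRT alone repeats only the test, but paired with RTR the design as a whole repeats both products.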

Regulatory Rules: FDA vs. EMA

The FDA and EMA both require replicate designs when ISCV exceeds 30%, but they don’t agree on everything.

The FDA prefers full replicate designs, especially for narrow therapeutic index (NTI) drugs like warfarin. Their 2023 guidance on Warfarin Sodium mandates a four-period TRRT/RTRT design. They also allow partial replicates for non-NTI HVDs, as long as the statistical model is correct. In 2023, 68% of HVD BE studies submitted to the FDA used replicate designs, and approval rates jumped to 79% compared to just 52% for non-replicate attempts.

The EMA is more flexible. They accept three-period full replicates (TRT/RTR) as the sweet spot for most HVDs. Their rules require at least 12 subjects in the RTR sequence to validate the design. They’re less likely to demand four-period studies unless the drug is an NTI. In 2023, 78% of approved HVD generics in Europe used replicate designs, with 63% using the three-period full replicate.

This mismatch causes headaches for global sponsors. A study designed to meet FDA standards might get rejected by the EMA because it didn’t include enough RTR data. Or vice versa.

When to Use Which Design

Choosing the right replicate design isn’t guesswork. It’s based on expected variability and drug class.

- ISCV < 30%: Stick with the standard two-way crossover. No need to overcomplicate.

- ISCV 30-50%: Go with a three-period full replicate (TRT/RTR). This is the industry’s most common choice. BioPharma Services’ 2023 survey of 47 CROs found 83% prefer this design for its balance of power and feasibility.

- ISCV > 50% or NTI drugs: Use a four-period full replicate (TRRT/RTRT). The FDA requires this for warfarin, dabigatran, and other high-risk drugs. It gives you the most data to ensure safety.
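As a rough illustration, the rule of thumb in the list above can be written as a small lookup. This Python sketch only encodes the article's thresholds; the function name `suggest_design` and the handling of the exact 30% and 50% boundaries are assumptions, and a real choice would also depend on the target agency and product-specific guidance.

```python
def suggest_design(iscv_percent, is_nti=False):
    """Suggest a BE study design from the expected within-subject CV (in percent).

    Encodes only the rule of thumb from the list above; boundary values
    (exactly 30% or 50%) are assigned to the three-period band by assumption.
    """
    if iscv_percent < 0:
        raise ValueError("ISCV cannot be negative")
    if is_nti or iscv_percent > 50:
        return "four-period full replicate (TRRT/RTRT)"
    if iscv_percent >= 30:
        return "three-period full replicate (TRT/RTR)"
    return "standard two-way crossover (TR/RT)"


if __name__ == "__main__":
    for cv, nti in ((20, False), (40, False), (60, False), (25, True)):
        print(f"ISCV={cv}%, NTI={nti}: {suggest_design(cv, nti)}")
```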

Statistical Analysis: The Real Challenge

The design is only half the battle. The analysis is where most studies fail. You can’t use a regular t-test. You need mixed-effects models that account for sequence, period, subject, and treatment effects. And you need to apply reference-scaling: adjusting the 80-125% bioequivalence range based on how variable the reference drug is.

The go-to tool? The R package replicateBE (version 0.12.1, CRAN 2023). It’s open-source, validated by regulators, and used by nearly every CRO. Its documentation has been downloaded over 1,200 times in Q1 2024 alone. But learning it takes time. A 2022 AAPS workshop found analysts need 80-120 hours of focused training to use it properly.

Common mistakes:

- Using a model that doesn’t account for period effects

- Forgetting to check if the reference variability meets the 30% threshold before scaling

- Not validating the model with simulations
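To make the threshold check in the second bullet concrete, here is a deliberately simplified Python sketch of how reference variability can be estimated when each subject receives the reference twice. It works on a single sequence and ignores period effects, so it is not a substitute for the mixed-effects analysis described above. The function names and the strict "greater than 30%" comparison are assumptions; it uses only the standard relation between the log-scale standard deviation s_wR and the coefficient of variation, CV = sqrt(exp(s_wR^2) - 1).

```python
import math
import statistics


def reference_within_subject_cv(ref_first, ref_second):
    """Estimate s_wR and CV_wR from two reference administrations per subject.

    ref_first / ref_second: PK values (e.g. AUC) for the same subjects,
    in the same order, from the first and second reference period.
    Simplification: one sequence, no period effect.
    """
    if len(ref_first) != len(ref_second) or len(ref_first) < 2:
        raise ValueError("need paired values for at least two subjects")
    # Within-subject differences on the log scale remove the subject effect.
    diffs = [math.log(a) - math.log(b) for a, b in zip(ref_first, ref_second)]
    # Var(difference) = 2 * within-subject variance, hence the division by 2.
    s2_wr = statistics.variance(diffs) / 2.0
    s_wr = math.sqrt(s2_wr)
    cv_wr = math.sqrt(math.exp(s2_wr) - 1.0)
    return s_wr, cv_wr


def scaling_allowed(cv_wr, threshold=0.30):
    """Return True if reference variability exceeds the scaling threshold (assumed strict)."""
    return cv_wr > threshold


if __name__ == "__main__":
    # Made-up AUC values, purely illustrative.
    first = [120.0, 85.0, 150.0, 60.0, 98.0, 210.0]
    second = [70.0, 140.0, 95.0, 110.0, 160.0, 120.0]
    s_wr, cv_wr = reference_within_subject_cv(first, second)
    print(f"s_wR = {s_wr:.3f}, CV_wR = {cv_wr:.1%}, scaling allowed: {scaling_allowed(cv_wr)}")
```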

Industry Trends and Future Directions

Replicate designs aren’t going away. They’re becoming the norm. The global BE study market hit $2.8 billion in 2023, and replicate studies now make up 35% of HVD assessments, up from 18% in 2019. The FDA rejected 41% of non-replicate HVD submissions in 2023. That’s a hard message.

WuXi AppTec, PPD, and Charles River dominate the market, but niche players like BioPharma Services are winning contracts by specializing in statistical rigor. Their clients don’t just want a study. They want a study that passes.

Emerging trends include adaptive designs. Imagine starting with a three-period replicate, but if early data shows variability is lower than expected, you switch to a standard analysis. The FDA’s 2022 draft guidance supports this. It’s efficient. It saves time. And it’s already being tested by Pfizer, who used machine learning to predict sample size needs with 89% accuracy using historical BE data.

The ICH is working on harmonizing global standards, with an update expected in Q3 2024. But until then, sponsors must navigate two sets of rules. A design that works in the U.S. might not fly in Europe. And vice versa.

Real-World Successes and Pitfalls

One clinical operations manager on the BEBAC forum shared: “Our levothyroxine study used TRT/RTR with 42 subjects. Passed on first submission. Previous attempts with 98 subjects in a 2x2 design failed.” That’s the power of the right design. But there are pitfalls. Inadequate washout periods. Poor subject retention. Using the wrong statistical model. A 2022 AAPS white paper flagged these as the top three reasons replicate studies fail. The key is preparation. Know your drug’s variability before you start. Run simulations. Talk to statisticians early. Don’t wait until the last minute to figure out your analysis plan.

Final Thoughts

Replicate study designs aren’t optional anymore for highly variable drugs. They’re the only way to get a generic approved without requiring hundreds of volunteers or compromising safety. The math, the regulations, the tools: they’re all in place. The challenge now is execution. If you’re planning a BE study for a drug with ISCV above 30%, don’t waste time on the old 2x2 design. Start with the right replicate structure. Invest in the right statistical expertise. And plan for dropouts. Because in bioequivalence, the difference between success and rejection often comes down to one thing: how well you measured variability.

What is the minimum sample size for a three-period full replicate BE study?

Regulatory agencies require at least 24 subjects total for a three-period full replicate design (TRT/RTR), with at least 12 subjects completing the RTR sequence. However, due to typical dropout rates of 15-25%, most sponsors recruit 30-35 subjects to ensure enough analyzable data.
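The over-recruitment arithmetic is simple enough to sketch. The Python snippet below assumes dropouts are a fixed fraction of enrolled subjects; the function name `subjects_to_recruit` is illustrative, and it does not check the separate per-sequence requirement (such as 12 completers in RTR).

```python
import math


def subjects_to_recruit(required_completers, dropout_rate):
    """Smallest enrollment such that the expected number of completers
    still reaches the required count, given a fractional dropout rate."""
    if not 0.0 <= dropout_rate < 1.0:
        raise ValueError("dropout_rate must be in [0, 1)")
    return math.ceil(required_completers / (1.0 - dropout_rate))


if __name__ == "__main__":
    for rate in (0.15, 0.25):
        print(f"dropout {rate:.0%}: recruit {subjects_to_recruit(24, rate)} subjects")
```

For 24 required completers this gives 29 subjects at 15% dropout and 32 at 25%, close to the lower end of the range quoted above.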

Can I use a partial replicate design for an NTI drug?

No. The FDA explicitly requires a full replicate design (TRRT/RTRT) for narrow therapeutic index (NTI) drugs like warfarin, dabigatran, or levothyroxine. Partial replicates don’t provide enough data on test product variability, which is critical for safety in NTI drugs.

Why do replicate designs reduce sample size?

Replicate designs allow regulators to scale the bioequivalence acceptance range based on the reference drug’s within-subject variability. This means you don’t need to prove equivalence to the same strict 80-125% range if the reference is naturally variable. The wider limits reduce the statistical burden, so fewer subjects are needed to detect true equivalence.
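To show what "scaling the range" looks like numerically, here is a small Python sketch of the EMA-style widened limits (average bioequivalence with expanding limits, ABEL). It assumes the commonly cited EMA parameters: a regulatory constant of 0.760, scaling only when the reference CV is above 30%, and a cap at a CV of 50%. The FDA's RSABE criterion is formulated differently and is not reproduced here. The function name `widened_limits` is illustrative.

```python
import math

K_EMA = 0.760  # regulatory constant for EMA-style expanding limits (assumed here)


def widened_limits(cv_wr):
    """Return (lower, upper) acceptance limits as ratios for a reference CV (fraction).

    At or below 30% CV the conventional 80-125% limits apply; above that the
    limits widen as exp(+/- k * s_wR), capped at the value reached at 50% CV.
    """
    if cv_wr <= 0.30:
        return (0.80, 1.25)
    cv_capped = min(cv_wr, 0.50)
    # Convert CV to the log-scale within-subject standard deviation.
    s_wr = math.sqrt(math.log(cv_capped ** 2 + 1.0))
    upper = math.exp(K_EMA * s_wr)
    return (1.0 / upper, upper)


if __name__ == "__main__":
    for cv in (0.25, 0.35, 0.45, 0.50, 0.60):
        lo, hi = widened_limits(cv)
        print(f"CV_wR {cv:.0%}: {lo:.2%} to {hi:.2%}")
```

Under these assumptions the limits start at 80-125%, widen smoothly above 30% CV, and stop widening at roughly 69.84-143.19% once the reference CV reaches 50%.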

What software is used to analyze replicate BE studies?

The R package replicateBE (version 0.12.1) is the industry standard. It’s validated, open-source, and accepted by the FDA and EMA. Other tools like Phoenix WinNonlin can also be used, but they require custom programming to implement reference-scaling correctly. Most CROs rely on replicateBE for its reliability and regulatory alignment.

Are replicate designs more expensive than standard designs?

Yes, per subject, because they involve more doses and longer study durations. But overall, they’re often cheaper. A standard design for a highly variable drug might need 100+ subjects. A replicate design needs 24-48. When you factor in recruitment, monitoring, and drug supply costs, the total study cost is usually lower with a replicate design, despite the added complexity per subject.

What’s the biggest mistake in replicate BE studies?

Using the wrong statistical model. Many teams assume a standard ANOVA will work. It won’t. You need a mixed-effects model with proper random effects for subject and period. Without it, the reference-scaling calculation is invalid, and the study will fail. The second biggest mistake? Underestimating dropout rates. Always over-recruit by 20-30%.

Wendy Claughton

January 19, 2026 AT 09:17

Wow, this is such a crucial topic, especially for anyone working with drugs like levothyroxine. I’ve seen so many studies fail because teams just assumed the old 2x2 design would work. It’s like trying to measure wind speed with a ruler. The math here isn’t just fancy; it’s life-or-death. I’m glad someone finally laid it out this clearly.

Aysha Siera

January 20, 2026 AT 23:15

They’re hiding something. Why do only big pharma get to use these fancy designs? Small labs? We get stuck with the broken system. The FDA doesn’t care about you. They care about patents.

Pat Dean

January 21, 2026 AT 10:51

Why are we letting Europeans dictate how we run clinical trials? We invented modern medicine. Now we’re bending over backward for EMA’s ‘sweet spot’ nonsense. This is why American innovation is dying.

Jay Clarke

January 23, 2026 AT 08:56

Bro. I did a four-period study last year. 42 people. 3 dropped out after period 2. The stat guy used replicateBE and somehow made it work. We got approved on the first try. But man, those 80 hours of training? I cried. Twice. Also, the coffee machine broke mid-study. Coincidence? I think not.

Selina Warren

January 23, 2026 AT 16:19

STOP using outdated methods. If your drug has ISCV over 30%, you’re not doing science, you’re gambling with people’s lives. Replicate designs aren’t optional. They’re the bare minimum. Stop pretending you’re saving money. You’re just saving time at the cost of safety.

Robert Davis

January 25, 2026 AT 10:31

Interesting. But I’ve seen replicateBE fail on datasets with missing values. The package assumes perfect data. Real life? Not so much. I’ve had to rewrite half the code just to get it to run. And don’t get me started on the EMA’s 12-subject RTR requirement. It’s arbitrary. Who decided that? A committee? In a basement?

Eric Gebeke

January 26, 2026 AT 16:08

Of course they’re pushing replicate designs. More data = more paperwork = more billing hours for consultants. They don’t care if it’s better; they care if it’s billable. This whole thing is a money machine wrapped in science jargon.

Joni O

January 28, 2026 AT 04:00

Just wanted to say thank you for this. I’m a new stat analyst and this is the first time I’ve seen it explained without 500 pages of jargon. I’m going to print this and put it on my wall. Also, yes, over-recruit. I learned that the hard way. 24 subjects? We ended up with 16. Almost had to restart. 😅

Ryan Otto

January 28, 2026 AT 18:51

The entire framework is a statistical illusion. Reference-scaled bioequivalence is a mathematical sleight-of-hand designed to mask the fact that generics are not truly equivalent. The regulators know this. The CROs know this. The public? Still asleep.

Max Sinclair

January 30, 2026 AT 16:04

Great breakdown. I especially appreciate how you highlighted the dropout issue. I’ve been in meetings where people act like 15% attrition is ‘normal’. It’s not. It’s a red flag. Always plan for 25%+ loss. And yes, replicateBE is the way to go. Just make sure your team actually knows how to use it.

Praseetha Pn

January 31, 2026 AT 06:08

Let me tell you what they don’t tell you: replicate designs are a trap. They look efficient but they’re just a longer leash for Big Pharma to control the narrative. The real issue? The reference drug isn’t even standardized across batches. So you’re scaling to a moving target. And nobody talks about that. Because then the whole house of cards falls.

Tyler Myers

January 31, 2026 AT 09:40

So you’re telling me the FDA’s 2023 guidance on warfarin is based on a 28-subject study? That’s not science. That’s a spreadsheet fantasy. I’ve worked in 12 different labs. I’ve seen what happens when you trust models over real-world data. People die. And then the lawyers show up. This whole replicate thing? It’s just a way to fast-track generics under the guise of ‘efficiency.’ I’ve seen the data. It’s not clean. It’s curated.

And don’t get me started on replicateBE. Open-source? Sure. But who validated it? A grad student? A contractor in Bangalore? The FDA doesn’t audit the code. They just accept the output. That’s not regulation. That’s wishful thinking.

And why do we still use the same 80-125% range as a baseline? That number came from the 1980s. Back when people didn’t have smartphones. Back when ‘bioequivalence’ meant ‘looks similar.’ Now we have CRISPR and AI. But we’re still using a 40-year-old math trick to approve life-saving drugs.

And you think the EMA’s 3-period design is better? Please. They’re just trying to keep their budget low. They don’t care if the drug works. They care if it passes the test. There’s a difference.

And the worst part? The people who actually take these drugs? They’re the ones who suffer when it fails. No one’s asking them. No one’s listening. We’re just optimizing spreadsheets while patients bleed out.

So yeah. Go ahead. Use replicateBE. Run your four-period study. Submit your 79% approval rate. But don’t pretend you’re protecting public health. You’re just playing the game.